Research Projects

Funding Body and Partners

Two ARC Discovery projects have been funded to develop an adaptive, intelligent, deep-learning-based speech processing system.

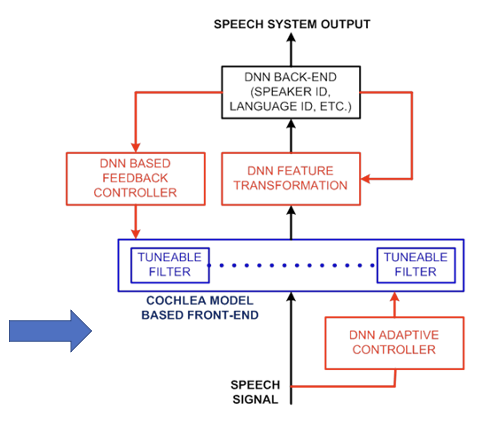

ARC Funded Project 1 (2019-2022)

Integrating biologically inspired auditory model into Deep Learning

The aim of this project is to discover how a biologically inspired auditory model can be tightly integrated into a state-of-the-art deep learning speech processing framework, and to model, design and exhaustively verify the resulting deep-learning-based auditory model. The model is intended to exploit both the adaptive properties of auditory models and the learning capabilities of deep learning systems, forming a continuously adaptive and interpretable deep learning model.

We will develop an active model of the cochlea that brings level-dependent adaptive gain and adaptive frequency selectivity, including adaptive non-linear compression of up to 2:1, into the deep learning paradigm, so that it can serve as a single flexible front-end for all future speech processing systems.
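As a rough illustration of what level-dependent gain with 2:1 compression means, the sketch below applies a static compressive gain to individual samples. It is not the project's cochlear model: the function names, the 30 dB knee, the reference amplitude and the sample-by-sample (unsmoothed) level estimate are all assumptions chosen for illustration. A real auditory front-end would apply this kind of gain per frequency channel and adapt it over time.

```python
import math


def compressive_gain_db(level_db, knee_db=30.0, ratio=2.0):
    """Gain in dB for a given input level.

    Below the (assumed) knee the gain is 0 dB. Above it, the output
    level grows by only 1/ratio dB for every 1 dB of input.
    """
    excess_db = max(level_db - knee_db, 0.0)
    return -excess_db * (1.0 - 1.0 / ratio)


def compress(samples, knee_db=30.0, ratio=2.0, ref=1e-5):
    """Apply the level-dependent gain to each sample independently.

    `ref` is an assumed reference amplitude that defines 0 dB.
    """
    out = []
    for x in samples:
        # Instantaneous level in dB relative to `ref` (floored to avoid log(0)).
        level_db = 20.0 * math.log10(max(abs(x), 1e-12) / ref)
        gain = 10.0 ** (compressive_gain_db(level_db, knee_db, ratio) / 20.0)
        out.append(x * gain)
    return out
```

With these assumed settings, an input 20 dB above the knee receives 10 dB of attenuation, so it leaves the compressor only 10 dB above the knee, while inputs below the knee pass through unchanged.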

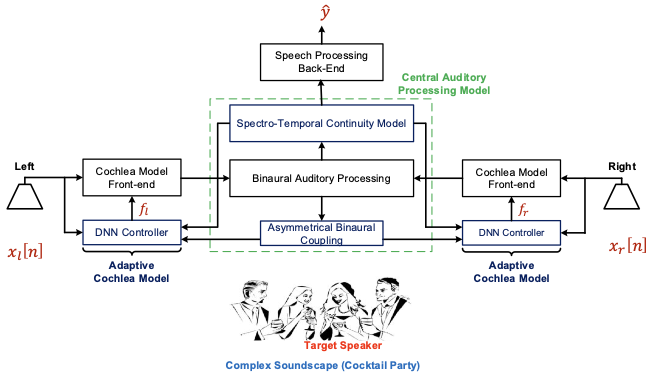

ARC Funded Project 2 (2021-2024)

Biologically Inspired Binaural Coupling for Selective Machine Hearing

The aim of the proposed research is to discover how binaural auditory processing in biological systems can be tightly integrated with machine learning frameworks. Drawing on our knowledge of the hierarchical auditory processing structures in the human auditory system, which exploit complementary information from the two ears, we will develop novel models with the adaptive binaural coupling and feedback loops they need to adapt to complex auditory scenes. This in turn should lead to machine learning systems that mimic the remarkable selective hearing ability of humans, allowing selective enhancement, suppression and segregation of sounds in complex auditory scenes and, consequently, a substantial increase in robustness.